![Image of a healthcare dashboard interface](https://cdn.prod.website-files.com/685faaede6c63f0a35c48896/68a9dda94fc55ef2cc2d72e3_flumes-console.webp)

Structured memory API for LLMs. Store, recall, and assemble prompt-ready context (facts, recent events, and a summary) under a token budget.

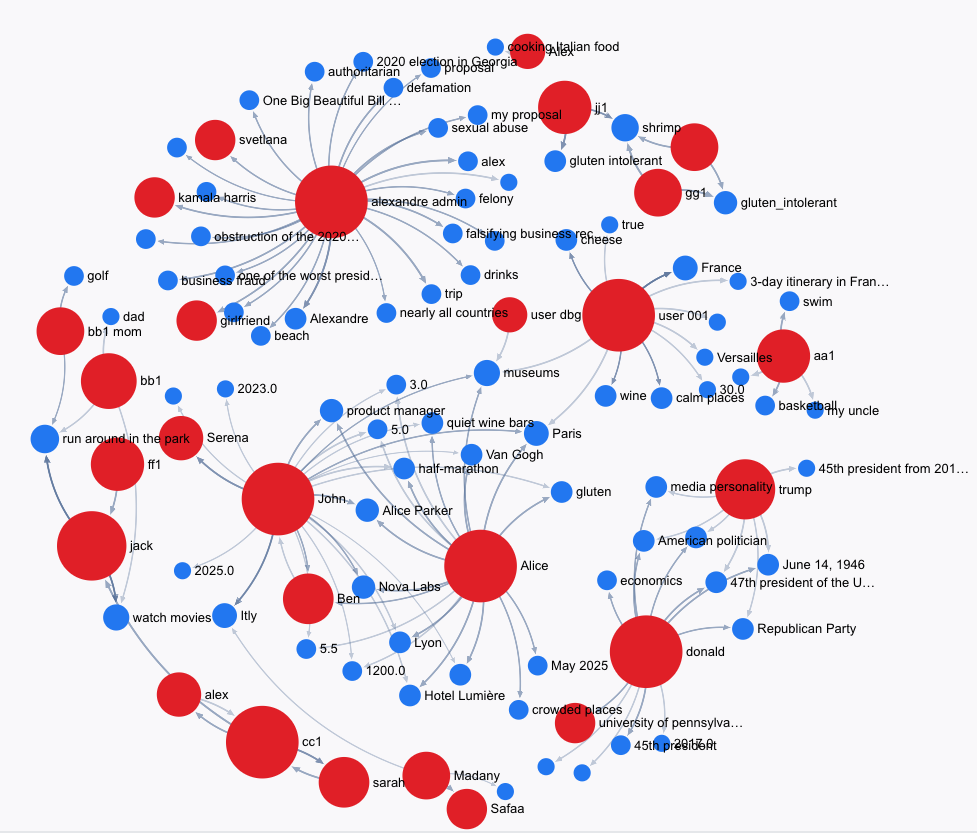

Flumes turns messy history into typed, queryable facts and budgeted context your model can use immediately. Explore the graph to see entities and relationships; track what was retrieved and why.

Flumes handles memory for LLMs: store, retrieve, and manage data with a single API. No manual pipelines or token juggling.

Gain insights into memory usage, set access controls, and manage data efficiently with built-in analytics and admin tools.

Flumes AI streamlines memory for your AI agents: one API, structured layers, and built-in analytics. Simple, scalable, and cost-efficient.

`/memories`, `/recall`, `/summarize`, `/prune`, `/observability/events`: a small API surface that does the work.
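To make the endpoint list concrete, here is a minimal Python sketch that builds JSON requests against those paths using only the standard library. It does not send them. Only the paths come from this page. The base URL `https://api.example.com`, the choice of POST and GET, and payload fields such as `entity_id`, `query`, and `max_tokens` are hypothetical placeholders, and authentication is omitted. Check the official API reference for the real request shapes.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical base URL; replace with the real Flumes endpoint.
BASE_URL = "https://api.example.com"


def build_request(path: str, payload: Optional[dict] = None, method: str = "POST") -> urllib.request.Request:
    """Build, but do not send, a JSON request for one of the listed endpoints."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        BASE_URL + path,
        data=data,
        method=method,
        headers={"Content-Type": "application/json"},
    )


# Paths come from the endpoint list; methods and payload fields are assumptions.
store = build_request("/memories", {"entity_id": "user-123", "content": "Prefers email over phone."})
recall = build_request("/recall", {"entity_id": "user-123", "query": "How should we contact them?", "max_tokens": 512})
events = build_request("/observability/events", method="GET")

# Sending would be urllib.request.urlopen(recall), which needs a real endpoint and credentials.
```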

Send a conversation turn and get back facts, recent events, a summary, sources, token counts, and an optional trace.
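The sketch below models a recall response as a typed Python object and renders it into prompt-ready context. The field names (`facts`, `recent_events`, `summary`, `sources`, `token_counts`, `trace`) are assumptions that mirror the sentence above, not the documented Flumes schema. The sample response and its token counts are invented for illustration.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class RecallResult:
    # Field names mirror the description above; they are assumptions, not the documented schema.
    facts: list[str]
    recent_events: list[str]
    summary: str
    sources: list[str]
    token_counts: dict[str, int]
    trace: Optional[dict[str, Any]] = None  # only present when a trace was requested

    @classmethod
    def from_json(cls, body: dict[str, Any]) -> "RecallResult":
        """Parse a decoded JSON response, tolerating missing sections."""
        return cls(
            facts=list(body.get("facts", [])),
            recent_events=list(body.get("recent_events", [])),
            summary=body.get("summary", ""),
            sources=list(body.get("sources", [])),
            token_counts=dict(body.get("token_counts", {})),
            trace=body.get("trace"),
        )

    def to_prompt(self) -> str:
        """Render facts, recent events, and the summary as one prompt-ready block."""
        lines = ["Facts:"] + [f"- {fact}" for fact in self.facts]
        lines += ["Recent events:"] + [f"- {event}" for event in self.recent_events]
        lines += ["Summary:", self.summary]
        return "\n".join(lines)


# Illustrative sample response (invented data, no trace requested).
sample = {
    "facts": ["User prefers email"],
    "recent_events": ["Asked about a billing error"],
    "summary": "Email-first user with an open billing question.",
    "sources": ["mem_1", "evt_9"],
    "token_counts": {"facts": 4, "events": 6, "summary": 9},
}
result = RecallResult.from_json(sample)
print(result.to_prompt())
```

Keeping `sources` and `token_counts` alongside the rendered text lets a caller log where each piece of context came from and what it cost.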

Greedy packing, deduplication, and supersession keep the assembled context predictably within a maximum token budget.
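Here is one way the three steps could fit together. This is a minimal sketch, not Flumes' actual packer. It assumes each candidate carries a hypothetical `slot` key (newer items in the same slot supersede older ones) and a `timestamp`. It also counts tokens crudely as whitespace-separated words in place of a real tokenizer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    text: str
    score: float    # combined retrieval score
    slot: str       # hypothetical key, e.g. "user-123:contact"; newer items supersede older ones
    timestamp: int  # larger means newer


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def pack(candidates: list[Candidate], max_tokens: int) -> tuple[list[Candidate], list[Candidate]]:
    """Return (kept, dropped) after supersession, dedupe, and greedy packing."""
    dropped: list[Candidate] = []

    # 1. Supersession: keep only the newest candidate per slot.
    newest: dict[str, Candidate] = {}
    for c in candidates:
        current = newest.get(c.slot)
        if current is None or c.timestamp > current.timestamp:
            if current is not None:
                dropped.append(current)
            newest[c.slot] = c
        else:
            dropped.append(c)

    # 2. Dedupe: drop candidates whose normalized text was already seen (highest score wins).
    seen: set[str] = set()
    unique: list[Candidate] = []
    for c in sorted(newest.values(), key=lambda c: c.score, reverse=True):
        key = " ".join(c.text.lower().split())
        if key in seen:
            dropped.append(c)
        else:
            seen.add(key)
            unique.append(c)

    # 3. Greedy packing: walk by descending score, keeping each item that still fits.
    kept: list[Candidate] = []
    used = 0
    for c in unique:
        cost = count_tokens(c.text)
        if used + cost <= max_tokens:
            kept.append(c)
            used += cost
        else:
            dropped.append(c)
    return kept, dropped


cands = [
    Candidate("User prefers phone calls", 0.9, "u1:contact", 1),
    Candidate("User prefers email", 0.8, "u1:contact", 2),
    Candidate("User lives in Berlin", 0.7, "u1:city", 1),
    Candidate("user lives in  berlin", 0.6, "u1:city_dup", 1),
    Candidate("User has a premium plan since March", 0.5, "u1:plan", 1),
]
kept, dropped = pack(cands, max_tokens=7)
```

Because the sort is stable and every step is deterministic, the same inputs always produce the same context. Returning the dropped items is what makes a trace of "what was left out and why" possible.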

See scores (semantic, BM25, graph, recency), dropped items, and cost signals for every request.
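To illustrate how several retrieval signals might be combined while keeping the result explainable, here is a sketch of a weighted blend that returns the per-signal breakdown next to the total. The weights, the 72-hour recency half-life, and the choice to scale raw BM25 by the batch maximum are all assumptions for illustration. The page does not say how Flumes weights or normalizes these scores.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    semantic: float   # cosine similarity, assumed to lie in [0, 1]
    bm25: float       # raw BM25 score (unbounded)
    graph: float      # graph proximity, assumed to lie in [0, 1]
    age_hours: float  # time since the memory was written

# Hypothetical weights and half-life, for illustration only.
WEIGHTS = {"semantic": 0.5, "bm25": 0.2, "graph": 0.2, "recency": 0.1}
HALF_LIFE_HOURS = 72.0


def recency(age_hours: float) -> float:
    # Exponential decay: 1.0 when fresh, 0.5 after one half-life.
    return 0.5 ** (age_hours / HALF_LIFE_HOURS)


def blend(candidates: list[Signals]) -> list[dict[str, float]]:
    """Return a per-candidate score breakdown so each final score can be explained."""
    max_bm25 = max((c.bm25 for c in candidates), default=0.0)
    out = []
    for c in candidates:
        parts = {
            "semantic": c.semantic,
            "bm25": c.bm25 / max_bm25 if max_bm25 > 0 else 0.0,  # scale BM25 into [0, 1]
            "graph": c.graph,
            "recency": recency(c.age_hours),
        }
        total = sum(WEIGHTS[name] * value for name, value in parts.items())
        parts["total"] = total
        out.append(parts)
    return out


breakdown = blend([Signals(1.0, 10.0, 0.5, 0.0), Signals(0.0, 5.0, 0.0, 72.0)])
```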

Entity-first facts and events with predicates, confidence scores, validity windows, and provenance.
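A fact record with those properties could look like the sketch below. The schema and the helper `facts_about` are hypothetical, modeled on the sentence above rather than on the documented Flumes format. The lookup is entity-first: it selects one entity's facts, keeps those whose validity window contains the query time, and filters by a minimum confidence.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Fact:
    # Hypothetical schema modeled on the description above; not the documented Flumes format.
    entity: str                   # subject, e.g. "user:123"
    predicate: str                # e.g. "prefers_channel"
    value: str                    # object, e.g. "email"
    confidence: float             # 0.0 to 1.0
    valid_from: datetime
    valid_to: Optional[datetime]  # None means still valid
    source: str                   # provenance, e.g. the message or document id it came from

    def is_valid_at(self, when: datetime) -> bool:
        # Half-open window: [valid_from, valid_to)
        return self.valid_from <= when and (self.valid_to is None or when < self.valid_to)


def facts_about(facts: list[Fact], entity: str, when: datetime, min_confidence: float = 0.5) -> list[Fact]:
    """Entity-first lookup: facts for one entity that are valid at `when` and confident enough."""
    return [
        f for f in facts
        if f.entity == entity and f.is_valid_at(when) and f.confidence >= min_confidence
    ]


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
t1 = datetime(2024, 6, 1, tzinfo=timezone.utc)
facts = [
    Fact("user:123", "prefers_channel", "phone", 0.9, t0, t1, "msg_1"),
    Fact("user:123", "prefers_channel", "email", 0.8, t1, None, "msg_7"),
    Fact("user:123", "city", "Berlin", 0.3, t0, None, "msg_2"),
    Fact("user:456", "city", "Paris", 0.9, t0, None, "msg_3"),
]
now = datetime(2024, 9, 1, tzinfo=timezone.utc)
current = facts_about(facts, "user:123", now)
```

Closing the old fact's window instead of deleting it preserves history: asking about March still returns "phone", while asking about September returns "email".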

PII redaction and EU data residency (beta); audit-friendly logs; VPC and bring-your-own (BYO) cloud deployments on request (coming soon).
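As a rough illustration of redaction before storage, here is a regex-based sketch that swaps emails and phone numbers for typed placeholders. It also counts the replacements, which is the kind of detail an audit log could record. This is a deliberately simple technique chosen for the example, not how Flumes detects PII. The patterns miss many formats and can produce false positives; for instance, the phone pattern also matches ISO dates such as `2024-01-01`.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace matches with typed placeholders and count them for an audit log."""
    counts: dict[str, int] = {}
    # Emails are handled first so digits inside an address are not mistaken for a phone number.
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


redacted, counts = redact("Reach me at jane.doe@example.com or +1 (555) 123-4567.")
```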


Designed for effortless integration and intelligent scaling.