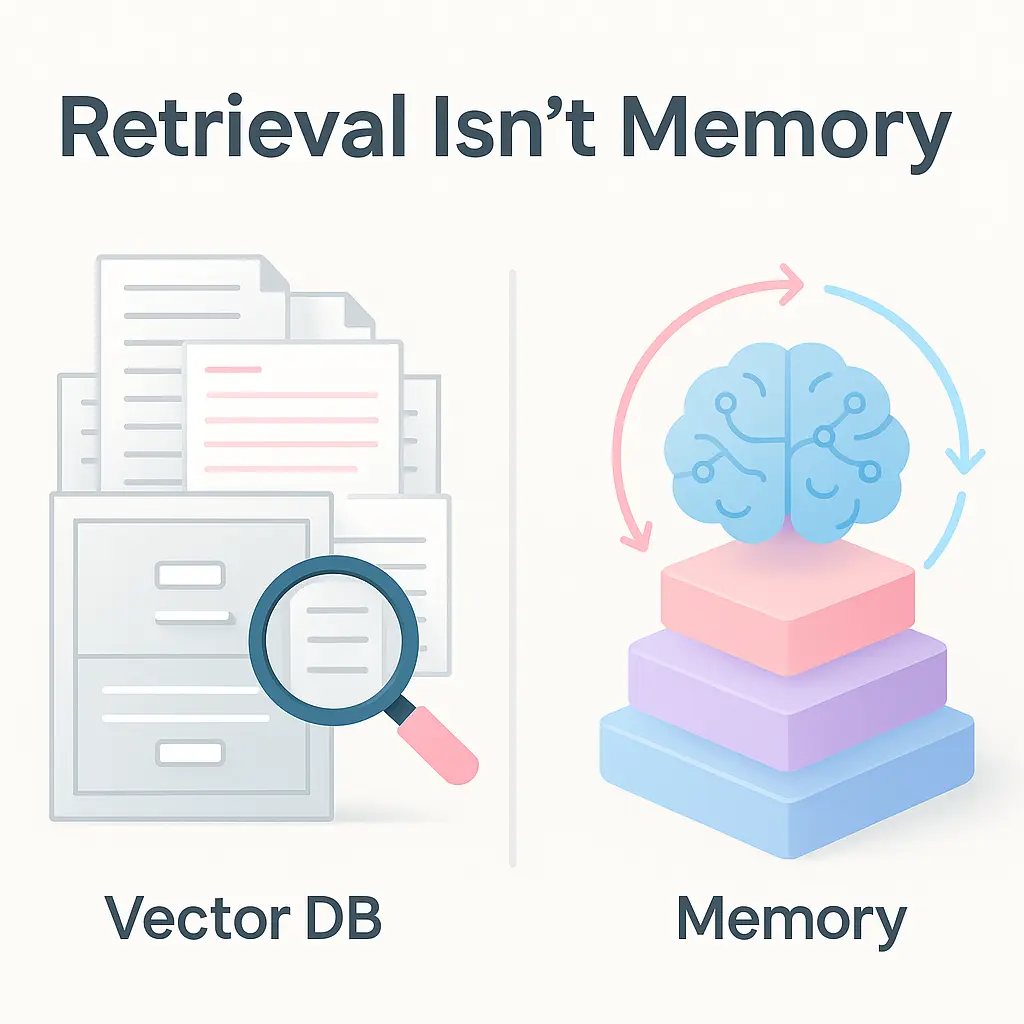

If you’re building LLM apps with vector search, you’re not alone; most devs start there. Vector DBs are great at finding similar chunks of information. But here’s the hard truth:

Retrieval ≠ memory.

Memory is about continuity, reasoning, and state, not just search.

In this post, we’ll break down the difference between retrieval and memory, show you where most stacks fall short, and walk through how to architect long-term memory into your AI agents using the Flumes SDK.

Memory Is About Meaning.

Retrieval is stateless. You ask for something, it finds something “similar.” But memory is:

Example:

```python
vector_db.query("target revenue")
# returns: "We grew 12% last month."

memory_agent.chat("What's our current goal?")
# returns: "We're targeting $1M ARR by Q4."
```

Same intent, radically different behavior. The first is a lookup. The second is a conversation with continuity.
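To make the lookup-versus-state distinction concrete, here is a minimal sketch in plain Python. Everything in it is hypothetical: `stateless_lookup`, `ToyMemory`, and the word-overlap scoring are stand-ins invented for illustration, not the Flumes implementation or any vector DB API, and the sample chunks are toy data.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, List, Optional


def words(text: str) -> set:
    """Lowercase word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def stateless_lookup(chunks: List[str], query: str) -> str:
    """Return the chunk sharing the most words with the query.

    Nothing persists between calls: the same query always sees the same index.
    """
    return max(chunks, key=lambda chunk: len(words(chunk) & words(query)))


@dataclass
class ToyMemory:
    """Hypothetical memory store: facts are keyed by topic, so updates replace old state."""
    facts: Dict[str, str] = field(default_factory=dict)

    def remember(self, topic: str, statement: str) -> None:
        self.facts[topic] = statement  # a newer statement supersedes the older one

    def recall(self, topic: str) -> Optional[str]:
        return self.facts.get(topic)


# Toy data: the lookup returns whatever looks similar, relevant or not.
chunks = ["Revenue grew 12% last month.", "The office moves in March."]
print(stateless_lookup(chunks, "target revenue"))

# The memory store answers from current state instead.
memory = ToyMemory()
memory.remember("goal", "We're targeting $1M ARR by Q4.")
print(memory.recall("goal"))
```

The lookup has no notion of "current": it can only rank what is already indexed. The memory store is tiny, but it carries state forward, which is the property the rest of this post is about.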

A real memory layer should:

That’s what we built Flumes for.

Let’s say you’re building a goal-tracking assistant. Here’s how you give it real memory in 3 lines:

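```python
from flumes.agent import Agent

agent = Agent(agent_id="sales_assistant")

# Store a fact in the agent's memory
agent.remember("We're targeting $1M ARR by Q4.")

# Ask a question; relevant memories are injected into the LLM prompt
print(agent.chat("What's our current goal?"))
```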

✅ Adds the fact to memory

✅ Retrieves relevant memories

✅ Injects them into the LLM prompt

✅ Logs the response for future use

Memory is now part of the loop, not just a query step.
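The four steps above can be sketched as a small loop. This is an illustration under loud assumptions, not how Flumes works internally: `LoopAgent`, `echo_llm`, the word-overlap ranking, and the prompt layout are all hypothetical, and the "model" simply returns its prompt so you can see what was injected.

```python
from typing import Callable, List


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns the prompt so the injection is visible."""
    return prompt


class LoopAgent:
    """Hypothetical agent that keeps memory inside the chat loop."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.memories: List[str] = []

    def remember(self, fact: str) -> None:
        # Step 1: add the fact to memory
        self.memories.append(fact)

    def _retrieve(self, message: str, k: int = 3) -> List[str]:
        # Step 2: rank stored memories by words shared with the message (toy scoring)
        query_words = set(message.lower().split())
        ranked = sorted(
            self.memories,
            key=lambda m: len(query_words & set(m.lower().split())),
            reverse=True,
        )
        return ranked[:k]

    def chat(self, message: str) -> str:
        relevant = self._retrieve(message)
        # Step 3: inject the retrieved memories into the prompt
        facts = "\n".join(f"- {m}" for m in relevant)
        prompt = f"Known facts:\n{facts}\n\nUser: {message}"
        reply = self.llm(prompt)
        # Step 4: log the exchange so later turns can draw on it
        self.memories.append(f"user: {message}")
        self.memories.append(f"assistant: {reply}")
        return reply


agent = LoopAgent(echo_llm)
agent.remember("We're targeting $1M ARR by Q4.")
print(agent.chat("What's our current goal?"))
```

A production memory layer would replace the word-overlap ranking with something much stronger, but the shape of the loop (write, retrieve, inject, log) stays the same.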

When you call .remember() or .chat(), Flumes:

If you want direct access to CRUD endpoints:

```python
from flumes import MemoryClient

mc = MemoryClient()
mc.add(messages=[{"role": "user", "content": "Buy milk"}], agent_id="shopping_bot")
hits = mc.search(agent_id="shopping_bot", query="milk")
```

One-to-one with the REST API. Plug in your own backend, use your own retry logic, or add custom summarizers.
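As one way to bring your own retry logic, here is a generic wrapper with exponential backoff. It is a sketch, not part of the SDK: `with_retries` is a hypothetical helper, and catching `ConnectionError` is an assumption, since this post does not say which exceptions the client raises.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(call: Callable[[], T], attempts: int = 3, base_delay: float = 0.5) -> T:
    """Run call(), retrying on ConnectionError with exponential backoff.

    Assumption: transient failures surface as ConnectionError. Swap in
    whatever exception type your client actually raises.
    """
    for attempt in range(attempts):
        try:
            return call()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            time.sleep(base_delay * 2 ** attempt)  # base, 2x base, 4x base, ...
    raise ValueError("attempts must be at least 1")
```

With a client like the one above, a call could be wrapped as `with_retries(lambda: mc.search(agent_id="shopping_bot", query="milk"))`. Keeping the wrapper separate from the client means the same policy can cover every call.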

We’re not anti-vector. Vector DBs are useful for doc search, external knowledge, and RAG workflows. But memory?

That needs to be:

Flumes complements your retrieval layer; it doesn’t replace it. It gives your agents a brain, not just a filing cabinet.
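One way to picture memory and retrieval working side by side is a prompt assembled from both sources. The sketch below is hypothetical: `build_prompt` and its section labels are invented for illustration, and the two input lists stand in for whatever your memory layer and your vector DB return.

```python
from typing import List


def build_prompt(question: str, doc_chunks: List[str], memories: List[str]) -> str:
    """Assemble one prompt from two sources: agent memory and retrieved documents."""
    sections = []
    if memories:
        # State the agent carries across conversations
        sections.append("What the agent remembers:\n" + "\n".join(f"- {m}" for m in memories))
    if doc_chunks:
        # External knowledge pulled in for this question only
        sections.append("Retrieved documents:\n" + "\n".join(f"- {c}" for c in doc_chunks))
    sections.append(f"Question: {question}")
    return "\n\n".join(sections)


print(build_prompt(
    "What's our current goal?",
    doc_chunks=["We grew 12% last month."],
    memories=["We're targeting $1M ARR by Q4."],
))
```

Each source keeps its own job: the retrieval layer supplies reference material, and the memory layer supplies what the agent already knows about you.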

Most LLM stacks are leaking memory by design: they weren’t built to remember. If you want continuity, understanding, and efficiency, you need more than search.

You need memory.

Start with Flumes SDK → Give your agents memory in 3 lines.